When resizing a Depthkit clip, you must also edit your metadata file to reflect the change in resolution. Open the metadata file that was exported with the Image Sequence in a text editor, scroll to the very bottom of the file, and edit textureHeight and textureWidth to match the resolution of your re-encoded clip. If the height was calculated automatically, verify the video resolution by right-clicking the video file, selecting Properties, and reading the frame height and width under Details.

Optimizing for scrubbing in Unity & Proxy workflow

Quickly scrubbing H.264 videos requires frequent I-Frames, which is useful when adjusting reconstruction settings or blocking out a scene. By default, FFmpeg's libx264 encoder places I-Frames up to 250 frames apart. This leaves large gaps on the timeline where the player doesn't have a complete frame to reference. To make every frame an I-Frame, add -g 1 to the FFmpeg command.

Making every frame an I-Frame dramatically increases the file size, so we recommend encoding multiple versions of your asset: an all-I-Frame proxy for scrubbing while you work, and a standard encode for final playback.
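Here is a minimal sketch of the proxy workflow described above, written as two shell functions. It assumes ffmpeg built with libx264 is on your PATH. The function names encode_proxy and encode_final are hypothetical. The shared settings (-crf 15, -pix_fmt yuv420p, -x264-params mvrange=511) are the ones explained elsewhere in this article.

```shell
#!/bin/sh
# Sketch of a two-version workflow: an all-I-Frame proxy for scrubbing
# and a standard encode for final playback.
# Assumptions: ffmpeg built with libx264 is on PATH; function and file
# names are hypothetical. -y overwrites an existing output file.
set -eu

# encode_proxy SRC DST
# -g 1 sets the GOP size to 1, so every frame is an I-Frame and the
# player can show any frame without decoding earlier ones.
encode_proxy() {
  ffmpeg -y -i "$1" -c:v libx264 -crf 15 -pix_fmt yuv420p \
    -x264-params mvrange=511 -g 1 "$2"
}

# encode_final SRC DST
# Same settings without -g, so libx264 keeps its default keyframe
# interval (up to 250 frames) and the file stays much smaller.
encode_final() {
  ffmpeg -y -i "$1" -c:v libx264 -crf 15 -pix_fmt yuv420p \
    -x264-params mvrange=511 "$2"
}
```

For example, run encode_proxy clip.mp4 clip_proxy.mp4 for editing and encode_final clip.mp4 clip_final.mp4 for delivery. Neither function rescales the video, so the textureWidth and textureHeight values in the metadata file remain valid for both versions.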

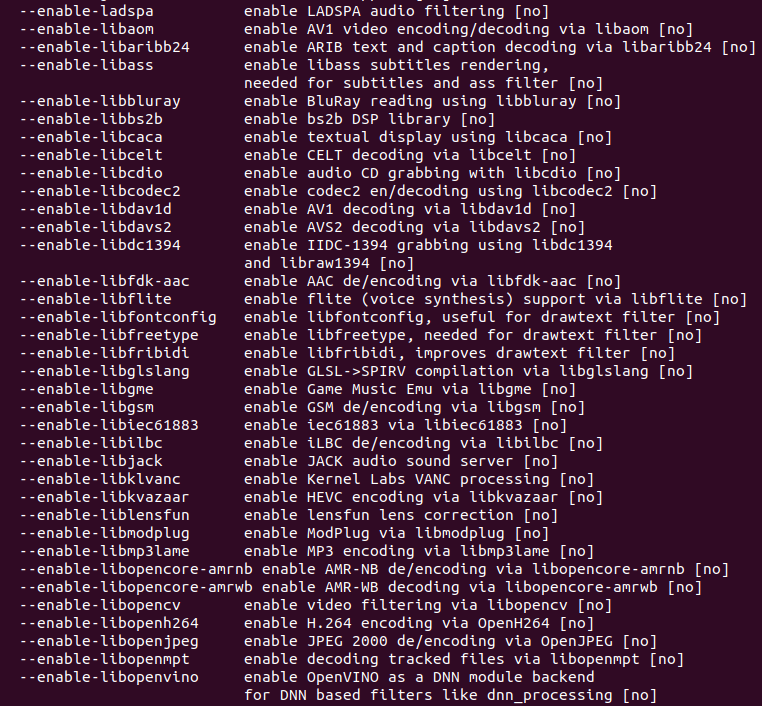

-c:v libx264 (codec) - encodes using the libx264 (H.264) encoder. You can swap in another encoder, such as libx265 (H.265/HEVC), depending on your target publishing platform.

-b:v 5M (bitrate) - encodes with a target bitrate of 5 Mbps. Adjust this value to balance file size and quality.

Using a constant rate factor (-crf 15) instead of a target bitrate

An alternative to targeting a bitrate with -b:v (x)M is to set a constant rate factor with -crf 15. This keeps quality uniform across the clip and lets the bitrate vary as needed. The value ranges from 0–51 (0 is lossless and 51 is the most heavily compressed), with a default of 23. We recommend 15 for a high quality asset; raise the value if you need a smaller file.

-pix_fmt yuv420p - applies the YUV 4:2:0 pixel format, which reduces file size and is the format most players and hardware decoders expect.

-x264-params mvrange=511 - for H.264 videos, this prevents artifacts that occur when the motion vector range (mvrange) exceeds the H.264 level 5.2 specification. Keep this value at or under 511 to stay within spec. See this documentation from Oculus for further information.

Downscaling your Combined Per Pixel video files

To scale a video and overlay it on an image, use -filter_complex together with -map:

ffmpeg -i input_video -loop 1 -i input_image -t video_duration -filter_complex "[0:v]scale=iw*80/100:ih*80/100[vid];[1:v][vid]overlay=(main_w-overlay_w)/2:(main_h-overlay_h)/2,setpts=PTS-STARTPTS[out]" -map "[out]" -c:v libx264 output_video

0:v refers to the input video, whose width and height are scaled to 80% of the original. 1:v refers to the input image, and the scaled video is overlaid at its centre. -loop 1 loops the image for the whole duration, and -t limits the output to the number of seconds you specify (the duration of the video). If you need the original audio in the output video, add -map 0:a before the output file name.
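The overlay command above needs the video's duration typed in by hand. The sketch below wraps it in a shell function that reads the duration with ffprobe first. The function names are hypothetical. It assumes ffmpeg and ffprobe are on your PATH and that the background image has an even width and height, which yuv420p requires. It also adds -pix_fmt yuv420p for player compatibility and maps audio with "0:a?". The trailing ? tells FFmpeg to include the original audio only when the video has an audio stream.

```shell
#!/bin/sh
# Sketch: scale a video to 80% of its size and centre it over a still
# image, reading the duration with ffprobe instead of typing it in.
# Assumptions: ffmpeg and ffprobe are on PATH; function names are
# hypothetical; the image has an even width and height (needed by yuv420p).
set -eu

# video_duration FILE: prints the container duration in seconds.
video_duration() {
  ffprobe -v error -show_entries format=duration \
    -of default=noprint_wrappers=1:nokey=1 "$1"
}

# overlay_on_image VIDEO IMAGE OUT
overlay_on_image() {
  dur=$(video_duration "$1")
  # [0:v] is the video, scaled to 80%; [1:v] is the looped image used as
  # the background. -t stops the output, because the looped image never ends.
  # "0:a?" includes the original audio (re-encoded with the default audio
  # encoder) only if the video has an audio stream.
  ffmpeg -y -i "$1" -loop 1 -i "$2" -t "$dur" \
    -filter_complex "[0:v]scale=iw*80/100:ih*80/100[vid];[1:v][vid]overlay=(main_w-overlay_w)/2:(main_h-overlay_h)/2,setpts=PTS-STARTPTS[out]" \
    -map "[out]" -map "0:a?" -c:v libx264 -pix_fmt yuv420p "$3"
}
```

For example, overlay_on_image input_video.mp4 background.png output_video.mp4 produces the same result as the manual command, without looking up the duration first.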
